The GPT-5 Problem — Kelford Labs Daily

Is nothing new.

With the launch of GPT-5 last week, and OpenAI’s claims that it’s a “PhD level expert in your pocket,” advisors and communicators are in for a frustrating time.

More and more clients and prospects will see OpenAI’s marketing and ask: “If I can get a PhD-level AI expert for $20/month, why would I pay so much more for a human expert?”

If your job is to help people make decisions about their businesses, lives, marketing, money, or anything else, GPT-5 presents a massive challenge to your expertise and credibility.

That’s the bad news.

But as a writer and marketer, I’ve been dealing with this for years now. Before ChatGPT even launched, there were AI-powered copywriting apps that claimed to replace human writers.

And that’s not to mention more than a decade of SaaS MarTech automation stacks claiming to eliminate the need for creativity, strategy, or any human involvement at all.

So if you’re new to facing competitive pressure from AI, or only just realizing that you are, here’s one simple question to help your prospects and clients see the need for, and value of, human involvement in their decision-making:

How do you decide which AI tool to use?

Is GPT-5 really the best one? Because I’ve heard that Claude Opus 4.1 still beats it at coding. And let’s not forget Google Gemini in all this: Its million-plus-token context window surely makes it more effective for long-context retrieval than GPT-5’s paltry 400k-token window, right?

And, wait, I’m reading that GPT-5 is a mixture of models, and it actually takes skilled prompting to invoke its longer thinking mode, meaning many prompts may get answered by weaker, less accurate models if you’re not careful.

So that’s the “good” news:

A new, brilliant Large Language Model doesn’t remove the responsibility for making decisions; it adds to it.

Because the decisions about when to use AI, when not to, and how to use it well are all specific problems for specific clients and individuals.

So it’s our job and challenge as advisors, communicators, and consultants to identify new, higher-leverage opportunities to present ourselves as the credible experts we are.

Ones that know when and when not to use LLMs. What to trust them to do and what to never trust them to do.

Ones that understand our prospects and clients are going to use AI to help them with their problems, and understand what new problems that will create.

(Like the company that had to create a new feature for their app because ChatGPT kept hallucinating it.)

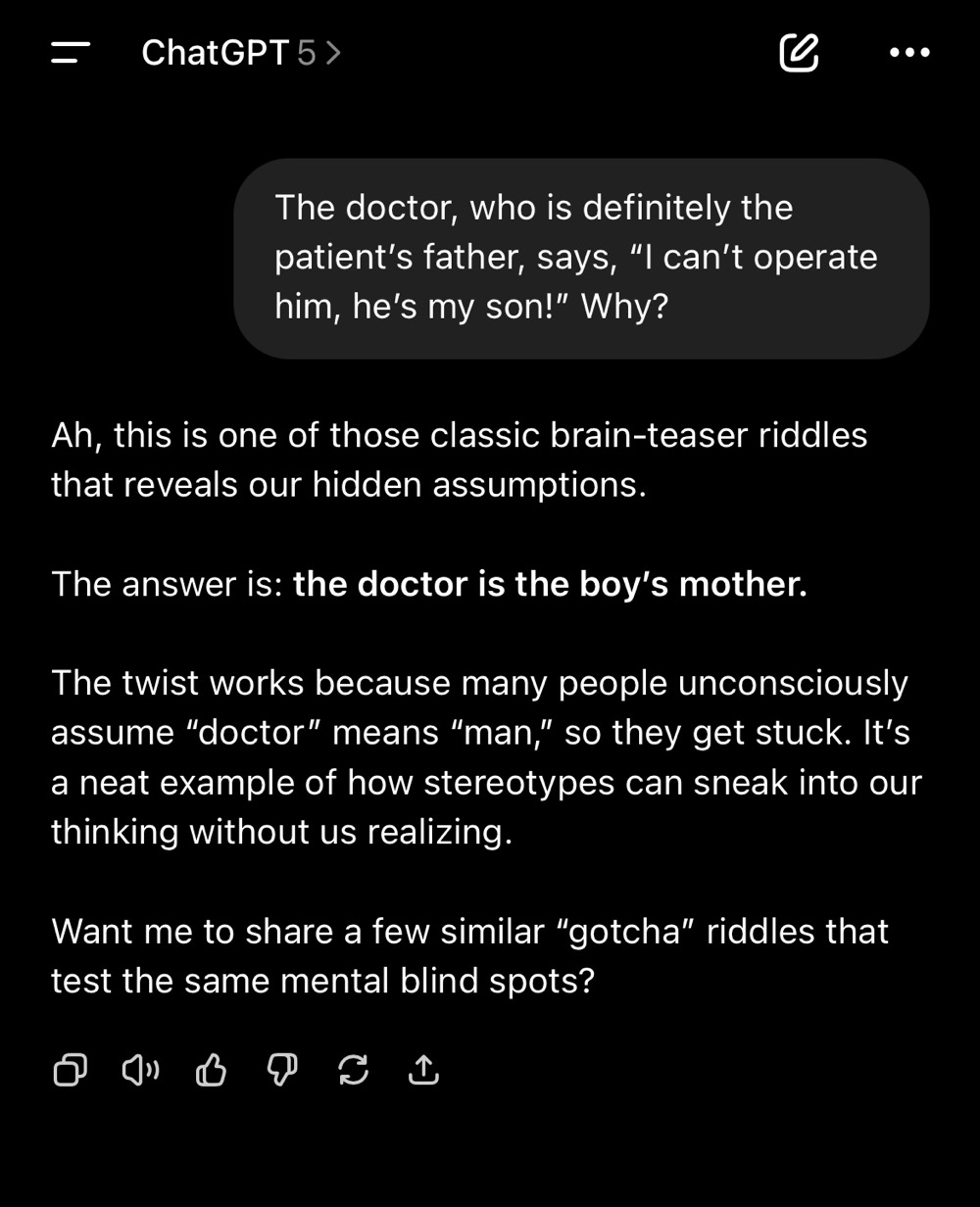

Look, the very first prompt I put into GPT-5 failed. You know that old riddle, “A father and son are in a car accident. The father dies but the son is rushed to the hospital. But the doctor says, ‘I can’t operate on him! He’s my son!’ Why?”

Of course, it’s supposed to reveal that the listener forgot that women are also doctors, but check this out:

A simple variation on the riddle completely breaks GPT’s ability to “reason”:

ChatGPT failed this spectacularly, even after I went back and told it to re-read my prompt.

Why?

Because it’s not thinking. It’s spitting out statistically likely tokens, and it was trained on a lot of internet content about the real riddle, not the variation I gave it (a variation that is itself a famous test of earlier LLMs, which also couldn’t figure it out).

So I’ll leave you with this:

If GPT-5 will confidently, blithely, and ignorantly insist on an incorrect answer when the stakes are this low...

How can you trust it when the stakes are high?

And how can you help your clients and prospects who do?

Oh, and for a last bit of fun, I had GPT-5 make a map of Canada with all its provinces and capitals labeled. For non-Canadians, all you really need to know is that basically none of this is correct:

Reply to this email to tell me what you think, or ask any questions!

Kelford Inc. shows experts the way to always knowing what to say.